A recent article from Adobe examines the gender biases behind digital voice assistants:

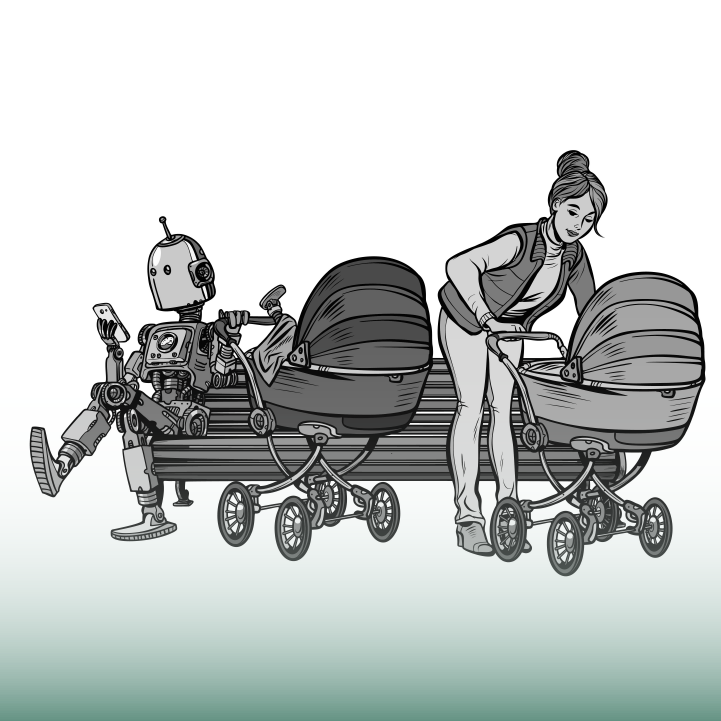

“Many articles from the past year have noted how digital voice assistants are gendered as female, either by default or exclusively. While banking and insurance apps often utilize male voices, reinforcing the male as authoritative, voice assistants for the home, such as Siri and Alexa, are female. These devices are spoken to while cooking, in order to pull up recipes; they set alarms, check the weather, tell time, and send emails. They play secretarial and domestic roles in our lives, and their carefully constructed personas align with traditional notions of homemaking and caretaking as feminine domains.”

How can a digital voice assistant be gendered in the first place? And how can it reinforce gender norms?

“The AI engines of leading voice assistants learn how to speak by mining conversational repositories that have been written by teams of people in order to give voice output a human feel. These often vast creative teams are paid to develop in-depth backstories for products, in order to humanize and help the AI express itself in a familiar way. For the most part, as Alexa and her chatty companions are a form of weak AI—what the machines say is largely scripted by a team of humans instead of being created by generative machine learning techniques. Clear themes emerge when you compare the scripts of Alexa, Siri, Cortana, and Google Assistant: All four have coy, submissive personalities. They coddle users, like stereotypical baby-sitters or mother figures. And while a bot’s personality can be stereotyped and gendered, the ingredients to train AI machines can be, too. The most famous example of this was Tay from Microsoft, an artificial Twitter chatbot that began to post racist and sexist tweets as it learned from others (it referred to feminism as a ‘cult’ and a ‘cancer.’)”

Read the full article from Adobe to learn more.